Optimisation problems

However, it might be that for a given task overfitting is of no concern, but there are still instabilities in loss function convergence happening during the training1. The loss landscape is a complex object having multiple local minima and which is moreover not at all understood due to the high dimensionality of the problem. That makes the gradient descent procedure of finding a minimum not that simple. However, if instabilities are observed, there are a few common things which could explain that:

-

The main candidate for a problem might be the learning rate (LR). Being an important hyperparameter which steers the optimisation, setting it too high make cause extremily stochastic behaviour which will likely cause the optimisation to get stuck in some random minimum being way far from optimum. Oppositely, setting it too low may cause the convergence to take very long time. The optimal value in between those extremes can still be problematic due to a chance of getting stuck in a local minimum on the way towards a better one. That is why several approaches on LR schedulers (e.g. cosine annealing) and also adaptive LR (e.g. Adam being the most prominent one) have been developed to have more flexibility during the training, as opposed to setting LR fixed from the very beginning of the training until its end.

-

Another possibility is that there are NaN/inf values or uniformities/outliers appearing in the input batches. It can cause the gradient updates to go beyond the normal scale and therefore dramatically affect the stability of the loss optimisation. This can be avoided by careful data preprocessing and batch formation.

-

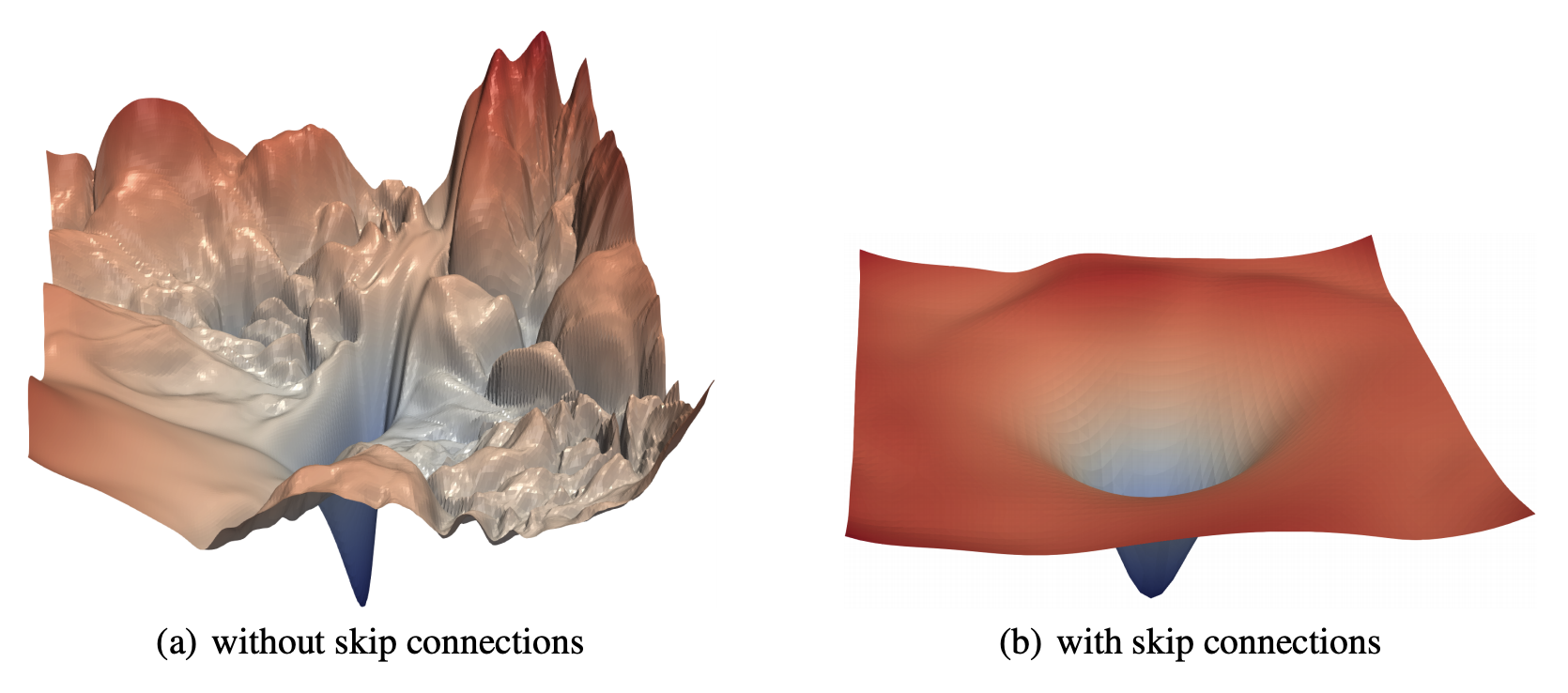

Last but not the least, there is a chance that gradients will explode or vanish during the training, which will reveal itself as a rapid increase/stagnation in the loss function values. This is largely the feature of deep architectures, where during the backpropagation gradients are accumulated from one layer to another, and therefore any minor deviations in scale can exponentially amplify/diminish as they get multiplied. Since it is the scale of the trainable weights themselves which defines the weight gradients, a proper weight initialisation can foster smooth and consistent gradient updates. Also, batch normalisation together with weight standartization showed to be a powerful technique to consistently improve performance across various domains. Finally, a choice of activation function is particularly important since it directly contributes to a gradient computation. For example, a sigmoid function is known to cause gradients to vanish due to its gradient being 0 at large input values. Therefore, it is often suggested to stick to classical ReLU or try other alternatives to see if it brings improvement in performance.